BucketArchiver utilizes industry-standard, POSIX.1-1988 compliant tar and RFC 1952/RFC 1951 compatible parallel gzip implementations. The generated archives are multi-platform compatible and can be accessed on:

BucketArchiver employs an AWS CloudFormation stack to manage its components. Interaction with BucketArchiver is performed through AWS CloudFormation interfaces (Console, CLI, API/SDK). Configurable parameters include:

Modifications to the CloudFormation stack can be made at any time post-deployment to adjust these parameters.

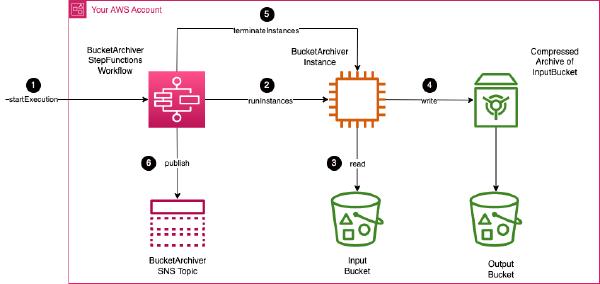

BucketArchiver orchestrates the archival workflow using an AWS Step Functions State Machine. Users can interact with the State Machine through AWS Step Functions interfaces (Console, CLI, API/SDK) to:

The State Machine provisions and terminates ephemeral EC2 instances as part of the archival workflow. These instances are optimized for archival tasks and use parallel compression on multi-core CPUs. The instances are based on Amazon Linux 2023.

An Amazon SNS topic is provisioned to publish BucketArchiver State Machine execution results, including execution statuses and metadata. The SNS topic also supports optional email notifications.

BucketArchiver requires a VPC for EC2 instance provisioning. Two VPC deployment options are available:

The VPC must allow the EC2 instance to access public endpoints for the following AWS services:

Alternatively, a VPC tailored for BucketArchiver can be provisioned. This VPC features private subnets and AWS PrivateLink endpoints for secure, internal AWS service access. The VPC contains two subnets, and each subnet includes the following VPC endpoints:

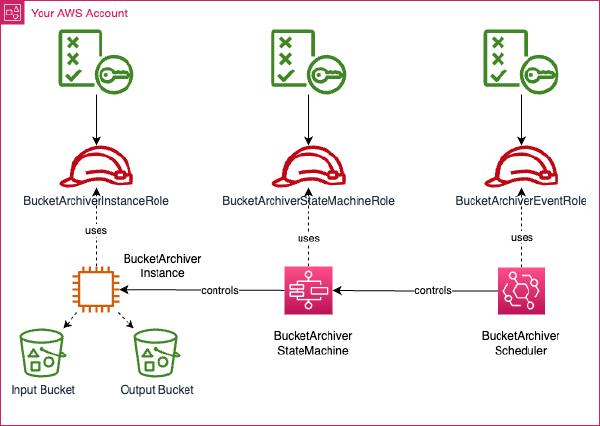

BucketArchiver is deployed within your AWS account, offering full control over data and compliance. IAM roles are provisioned for:

Amazon CloudWatch is used for:

You can obtain the BucketArchiver CloudFormation template from the AWS Marketplace. There are two template variants available:

We provide a dedicated, interactive deployment guide here: [Deployment Guide](https://www.bucketarchiver.com/deployment-guide/).

The deployment usually completes within 3-5 minutes.

| Parameter Name | Type | Default Value | Min/Max | Constraints | Description and Examples |
|---|---|---|---|---|---|
| InputBucket | String | None | 3-63 | `[a-z0-9\-.]+` | Input S3 bucket name. Must have 3-63 characters; can contain lowercase letters, numbers, hyphens, and periods. |
| OutputBucket | String | None | 3-63 | `[a-z0-9\-.]+` | Output S3 bucket name. Similar constraints as InputBucket. |
| ArchivePattern | String | `*` | N/A | Custom Pattern | Defines file or directory pattern for archiving. E.g., `*` for all files, `*.txt` for all text files. |
| ArchiveName | String | `archive` | 1-128 | Alphanumeric, -, _, . | Specifies the archive name. Resulting file will be `<YourSpecifiedName>_<ISODate>.tar.gz`. E.g., `archive_2023-09-15T14-30-45Z.tar.gz` if name is `archive`. |
| MaxExecutionTime | Number | 3600 | 1-86400 | N/A | Max time for archival process in seconds. Ranges from 1 second to 24 hours. |
| Scheduler | String | None | 1-256 | Specific Formats | Scheduling expression for triggering. E.g., specific time: `at(2023-09-15T14:30:45)`, daily at specific time: `cron(30 14 * * ? *)`, or every 5 days: `rate(5 days)`. |
| EmailAddress | String | `''` | N/A | Email Format | Optional. Receives notifications when archival completes. E.g., `user@example.com` or leave empty. |
| KMSKeysRestrictionList | CommaDelimitedList | `*` | N/A | ARN Format or `*` | Comma-separated list of KMS key ARNs to restrict access to. Use `*` for no restrictions. E.g., `arn:aws:kms:REGION:ACCOUNT_ID:key/KEY_ID,arn:aws:kms:REGION:ACCOUNT_ID:key/ANOTHER_KEY_ID`. |
| LogGroupRetentionDays | Number | 7 | N/A | 1, 3 | Number of days to retain log events in CloudWatch. Allowed values are 1 and 3. |
| InstanceType | String | `t2.micro` | N/A | List of EC2 Types | EC2 instance type like `m5.large`, `t3.small`, etc. |
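The constraints in the table can be checked locally before a stack is created or updated. The following is a minimal sketch, not part of BucketArchiver: the function names are hypothetical, the bucket check only mirrors the pattern and length given in the table (S3 itself enforces further naming rules), and the timestamp format is inferred from the `archive_2023-09-15T14-30-45Z.tar.gz` example.

```shell
#!/bin/sh
# Hypothetical helpers that mirror the parameter table; names are illustrative.

# Succeeds if $1 is 3-63 characters of lowercase letters, digits, hyphens, periods.
is_valid_bucket_name() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[a-z0-9.-]{3,63}$'
}

# Succeeds if $1 is a whole number between 1 and 86400 (MaxExecutionTime, seconds).
is_valid_max_execution_time() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$1" -ge 1 ] && [ "$1" -le 86400 ]
}

# Prints the expected archive file name for ArchiveName $1.
# $2 is an optional timestamp; it defaults to the current UTC time
# (format assumed from the example in the table).
archive_file_name() {
  ts="${2:-$(date -u +%Y-%m-%dT%H-%M-%SZ)}"
  printf '%s_%s.tar.gz\n' "$1" "$ts"
}
```

For example, `archive_file_name archive 2023-09-15T14-30-45Z` prints `archive_2023-09-15T14-30-45Z.tar.gz`, which can help when locating the result in the output bucket.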

| Parameter Name | Type | Default Value | Min/Max | Constraints | Description and Examples |
|---|---|---|---|---|---|
| VpcCidrBlock | String | `10.10.10.0/24` | N/A | CIDR Format | VPC CIDR block for EC2 instances, e.g., `10.0.0.0/24`. |
| SubnetACidrBlock | String | `10.10.10.0/25` | N/A | CIDR Format | CIDR block for Public Subnet A, e.g., `10.0.0.0/26`. |
| SubnetBCidrBlock | String | `10.10.10.128/25` | N/A | CIDR Format | CIDR block for Public Subnet B, e.g., `10.0.0.128/26`. |

Deployment and continuous configuration of BucketArchiver are executed through CloudFormation. After deploying the CloudFormation template with the initial settings, you can modify these parameters as needed.

To update a CloudFormation stack after parameter changes, adjust the stack's parameters using the AWS Management Console, AWS CLI, or SDKs, and pass the revised parameter values to CloudFormation. The service then compares the existing stack with the new parameters and updates the stack to match your settings.

Via AWS Console

Via AWS CLI

When updating the stack via the AWS CLI, provide the parameter key and value using the --parameters option:

```shell
aws cloudformation update-stack \
  --stack-name BucketArchiverStack-XXXYYY \
  --use-previous-template \
  --parameters ParameterKey=<KEY>,ParameterValue="<VALUE>"
```
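One detail worth keeping in mind with `update-stack`: parameters that are not listed in `--parameters` are not carried over automatically. CloudFormation falls back to the template default, or rejects the update if there is none. The sketch below builds an argument list that changes one parameter and marks all others with `UsePreviousValue=true`. The function name is hypothetical, and the key list in the usage comment is only a subset of the parameters documented above.

```shell
#!/bin/sh
# Hypothetical helper: prints one --parameters argument per line.
# The changed key gets its new value; every other key keeps its current value.
# Usage: build_update_parameters CHANGED_KEY NEW_VALUE ALL_KEYS...
build_update_parameters() {
  changed_key="$1"
  new_value="$2"
  shift 2
  for key in "$@"; do
    if [ "$key" = "$changed_key" ]; then
      printf 'ParameterKey=%s,ParameterValue=%s\n' "$key" "$new_value"
    else
      printf 'ParameterKey=%s,UsePreviousValue=true\n' "$key"
    fi
  done
}

# Example (not executed here). The unquoted substitution relies on word
# splitting, so it only suits values without spaces or commas.
# aws cloudformation update-stack \
#   --stack-name BucketArchiverStack-XXXYYY \
#   --use-previous-template \
#   --parameters $(build_update_parameters MaxExecutionTime 7200 \
#       InputBucket OutputBucket MaxExecutionTime)
```

Because the stack provisions IAM roles, the update may also need a `--capabilities` flag such as `CAPABILITY_IAM` or `CAPABILITY_NAMED_IAM`; which one applies depends on the template and is an assumption here.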

The Scheduler parameter in the BucketArchiver CloudFormation template determines when the archival process is triggered. Scheduling uses the cron, rate, and at expressions from Amazon EventBridge, giving you precise control over when archival starts. Depending on your expression, you will be able to achieve:

- Rate expressions use the syntax `rate(value unit)`, for example `rate(1 day)`.
- Cron expressions use the syntax `cron(minutes hours day-of-month month day-of-week year)`, for example `cron(0 18 ? * * *)`.

The `at()` expression specifies a unique timestamp when an event should fire, ideal for one-off scheduled tasks. It uses the syntax `at(yyyy-mm-ddThh:mm:ss)`, for example `at(2023-09-01T15:30:00)`.

Using an `at()` expression yields a one-time execution at a point in time. If you specify a past point in time, the execution will never be started automatically but can be triggered using an on-demand execution of the BucketArchiver AWS Step Functions state machine. Please note that selecting a past date in the at expression will immediately trigger an execution.

If you intend to use BucketArchiver for on-demand operation only (using the AWS console, Step Functions CLI/API, etc.), we advise using the at expression with a date in the far future, such as: `at(2099-12-31T00:00:00)`
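A quick local check can catch a malformed Scheduler value before it reaches CloudFormation. This is a rough sketch under stated assumptions: the function name is hypothetical, and the patterns only test the overall shape of the three expression types. They do not validate cron field contents or calendar dates, so EventBridge may still reject a value that passes here.

```shell
#!/bin/sh
# Hypothetical helper: prints "rate", "cron", or "at" for a recognized
# Scheduler expression and returns 1 for anything else.
schedule_kind() {
  expr_in="$1"
  if printf '%s\n' "$expr_in" | LC_ALL=C grep -Eq \
      '^rate\([1-9][0-9]* (minute|hour|day)s?\)$'; then
    echo rate
  elif printf '%s\n' "$expr_in" | LC_ALL=C grep -Eq \
      '^cron\(([^ ()]+ ){5}[^ ()]+\)$'; then
    # Six space-separated fields; field contents are not checked.
    echo cron
  elif printf '%s\n' "$expr_in" | LC_ALL=C grep -Eq \
      '^at\([0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2}\)$'; then
    echo at
  else
    return 1
  fi
}

# Example: schedule_kind 'rate(5 days)' prints "rate".
```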

The on-demand workflow allows you to manually trigger the BucketArchiver AWS StepFunctions state machine as needed. Follow these steps to execute an on-demand archival in

```shell
# Resolve the region and account ID, then start the execution (replace <XXXYYY>)
REGION=$(aws configure get region)
ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)
aws stepfunctions start-execution --state-machine-arn "arn:aws:states:$REGION:$ACCOUNT_ID:stateMachine:BucketArchiverStateMachine-<XXXYYY>" --name "Execution-1" --input '{}'
```

```powershell
# Braces keep PowerShell from parsing "$REGION:" as a scope or drive qualifier
$REGION = aws configure get region
$ACCOUNT_ID = (Get-STSCallerIdentity).Account
aws stepfunctions start-execution --state-machine-arn "arn:aws:states:${REGION}:${ACCOUNT_ID}:stateMachine:BucketArchiverStateMachine-<XXXYYY>" --name "Execution-1" --input '{}'
```
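Step Functions requires execution names to be unique per state machine for 90 days, so reusing a fixed name such as `Execution-1` is likely to fail on a second run. The Bash sketch below assembles the state machine ARN and generates a timestamped name. Both function names are hypothetical, and the ARN assumes the standard `aws` partition.

```shell
#!/bin/sh
# Hypothetical helpers for on-demand executions.

# Prints the state machine ARN.
# $1 = region, $2 = account ID, $3 = stack-specific suffix (the <XXXYYY> part).
state_machine_arn() {
  printf 'arn:aws:states:%s:%s:stateMachine:BucketArchiverStateMachine-%s\n' \
    "$1" "$2" "$3"
}

# Prints an execution name with a UTC timestamp suffix.
# $1 is an optional prefix (default: Execution).
unique_execution_name() {
  printf '%s-%s\n' "${1:-Execution}" "$(date -u +%Y%m%dT%H%M%SZ)"
}

# Example (not executed here):
# ARN=$(state_machine_arn "$REGION" "$ACCOUNT_ID" "<XXXYYY>")
# EXECUTION_ARN=$(aws stepfunctions start-execution \
#   --state-machine-arn "$ARN" --name "$(unique_execution_name)" \
#   --input '{}' --query executionArn --output text)
# aws stepfunctions describe-execution --execution-arn "$EXECUTION_ARN" \
#   --query status --output text
```

The `describe-execution` call in the example reports the status of the run, which is useful when no email notification is configured.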

BucketArchiver integrates with CloudWatch metrics to streamline operational monitoring. In CloudWatch, metrics are organized into namespaces, serving as containers for specific metric categories. The BucketArchiver namespace contains:

Dimensions: InstanceId & InstanceType

- `cpu_usage_idle`, `cpu_usage_iowait`, and more.
- `netstat`: these metrics (e.g., `tcp_established`, `net_bytes_recv`) offer a comprehensive view of network activity.
- `ethtool`: metrics such as `rx_packets`, `tx_packets`, and several "allowance exceeded" indicators shed light on network interface performance.

Dimensions: InputBucket (indicating the source S3 bucket) & RunTimestamp (marking the task's conclusion timestamp).
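The instance-level metrics can be read with the CloudWatch CLI. The sketch below formats the two instance dimensions for the `--dimensions` option. It assumes the namespace is literally named `BucketArchiver` and that the metrics carry exactly the `InstanceId` and `InstanceType` dimensions; the function name and the sample instance values are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: prints the instance dimensions in AWS CLI shorthand.
# $1 = EC2 instance ID, $2 = EC2 instance type.
instance_dimensions() {
  printf 'Name=InstanceId,Value=%s Name=InstanceType,Value=%s\n' "$1" "$2"
}

# Example (not executed here). list-metrics shows which metric names and
# dimensions actually exist in the namespace.
# aws cloudwatch list-metrics --namespace BucketArchiver
# aws cloudwatch get-metric-statistics \
#   --namespace BucketArchiver --metric-name cpu_usage_idle \
#   --dimensions $(instance_dimensions i-0123456789abcdef0 m5.large) \
#   --start-time 2023-09-15T14:00:00Z --end-time 2023-09-15T15:00:00Z \
#   --period 60 --statistics Average
```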

BucketArchiver logs important events and information to Amazon CloudWatch Logs. You can access the logs in the CloudWatch console under the `/BucketArchiver-<CloudFormationStackId>` log group. This log group contains the execution log of the AWS Step Functions state machine and the output of the compression process on the EC2 instance.

The following log streams are created as part of the log group and can be used for troubleshooting purposes:

| Log Stream Name | Use Case | Notes |
|---|---|---|
| `states/BucketArchiverStateMachine/<date>/<execution-id>` | Log events of BucketArchiver state machine | |
| `invoke/<instance-id>/log` | Log events of BucketArchiver EC2 invocation process | Use this to understand which objects were included in the archival process. |
| `archive/<input-bucket-name>/<date>` | Log events of BucketArchiver archival and compression process | Use this to investigate potential S3 bucket access permission issues. |
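The stream naming scheme makes it possible to narrow a log query to one instance or one input bucket. The following sketch builds the log group name and a stream prefix from the patterns in the table. The function names are hypothetical, and the stack ID and bucket name in the usage comment are placeholders.

```shell
#!/bin/sh
# Hypothetical helpers derived from the log stream patterns in the table.

# Prints the log group name for CloudFormation stack ID $1.
log_group_name() {
  printf '/BucketArchiver-%s\n' "$1"
}

# Prints a log stream prefix.
# $1 = states | invoke | archive
# $2 = instance ID (invoke) or input bucket name (archive)
log_stream_prefix() {
  case "$1" in
    states)  printf 'states/BucketArchiverStateMachine/\n' ;;
    invoke)  printf 'invoke/%s/\n' "$2" ;;
    archive) printf 'archive/%s/\n' "$2" ;;
    *) return 1 ;;
  esac
}

# Example (not executed here):
# aws logs filter-log-events \
#   --log-group-name "$(log_group_name <CloudFormationStackId>)" \
#   --log-stream-name-prefix "$(log_stream_prefix archive my-input-bucket)"
```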

BucketArchiver internally uses a parallel compression tool capable of exploiting multiple CPU cores, providing more scalable and faster compression of your buckets than traditional approaches. Compression and archival performance depends on multiple factors, such as:

We advise the following procedure for optimizing efficiency:

When selecting an EC2 instance type for your BucketArchiver workflows, consider factors such as CPU, memory, and network performance. In the CloudFormation template we have preselected a wide range of EC2 instance types that should provide optimum price/performance for BucketArchiver operation.

BucketArchiver supports archival workflows across different AWS regions. Keep in mind that cross-region data transfers may impact performance and incur additional data transfer costs. The dedicated VPC deployment variant is limited to accessing S3 buckets in the same region as the CloudFormation stack.

We maintain a heavily trimmed-down Amazon Linux based AMI for BucketArchiver, and the instances deployed from this AMI are ephemeral. Even so, a patch update will be required every now and then. If there is a crucial update for the BucketArchiver AMI, primarily related to critical CVEs (Common Vulnerabilities and Exposures), AWS Marketplace will send out a notification. Once you are notified, we will provide a set of updated CloudFormation templates that use a patched AMI not affected by the CVE. Updating BucketArchiver involves updating the template of every BucketArchiver CloudFormation stack that you have deployed.

Initial Release

Pick from our deployment options on AWS Marketplace.